VTUAV--Visible-Thermal UAV Tracking: A Large-Scale Benchmark and New Baseline

News

- 3/28/2022: The VTUAV dataset is released on Google Drive.

- 4/6/2022: The VTUAV dataset is released on Baidu Disk.

- 4/11/2022: The paper is available on arXiv.

- 4/15/2022: Three files (train_LT_part1, train_ST_part3, train_ST_part4) have been updated to correct some mistakes. Sorry for the inconvenience.

- 5/10/2022: The RGB split of the VTUAV dataset for RGB tracking is released.

- 7/27/2022: The split of the VTUAV dataset for video instance segmentation (100 videos containing RGB-T image pairs with mask annotations) is released.

Highlights

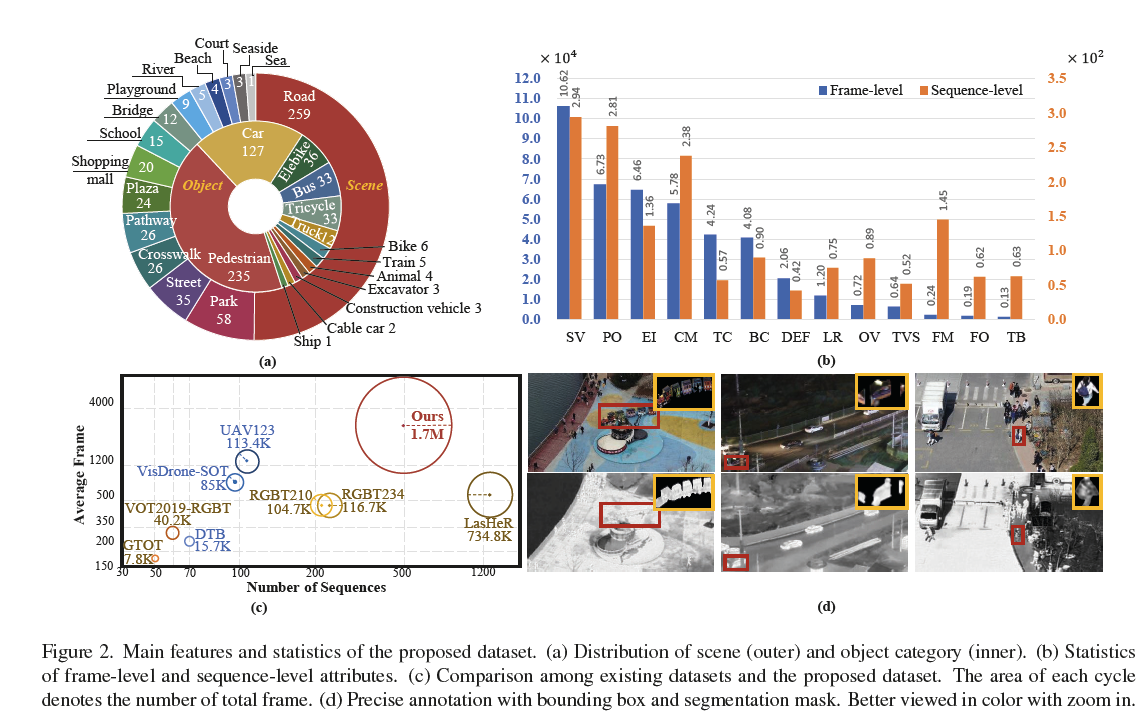

- Large-scale sequences with high diversity: million-scale paired training and test frames

- Comprehensive evaluation: Short-term; long-term; pixel-level target prediction

- Generic object and scene categories: 2 cities; 13 object classes; 15 scene classes

- Hierarchical attributes: Sequence-level and frame-level attributes for training

Abstract

With the popularity of multi-modal sensors, visible-thermal (RGB-T) object tracking aims to achieve robust performance and wider application scenarios with the guidance of objects’ temperature information. However, the lack of paired training samples is the main bottleneck for unlocking the power of RGB-T tracking. Since it is laborious to collect high-quality RGB-T sequences, recent benchmarks only provide test sequences. In this paper, we construct a large-scale benchmark with high diversity for visible-thermal UAV tracking (VTUAV), including 500 sequences with 1.7 million high-resolution (1920×1080 pixels) frame pairs. In addition, comprehensive applications (short-term tracking, long-term tracking and segmentation mask prediction) with diverse categories and scenes are considered for exhaustive evaluation. Moreover, we provide a coarse-to-fine attribute annotation, where frame-level attributes are provided to exploit the potential of challenge-specific trackers. We also design a new RGB-T baseline, named Hierarchical Multi-modal Fusion Tracker (HMFT), which fuses RGB-T data at various levels. Numerous experiments on several datasets are conducted to demonstrate the effectiveness of HMFT and the complementarity of different fusion types.

Download

| Type | RGB-T version (Baidu Disk) | RGB-T version (Google Drive) | RGB version (Baidu Disk) | RGB version (Google Drive) | VIS Video (Baidu Disk) | VIS Video (Google Drive) |
|---|---|---|---|---|---|---|
| Full dataset | link | link | link | link | link | link |
| train_ST_part1.zip | link | link | link | link | | |
| train_ST_part2.zip | link | link | link | link | | |
| train_ST_part3.zip | link | link | link | link | | |
| train_ST_part4.zip | link | link | link | link | | |
| train_ST_part5.zip | link | link | link | link | | |
| train_ST_part6.zip | link | link | link | link | | |
| train_ST_part7.zip | link | link | link | link | | |
| train_ST_part8.zip | link | link | link | link | | |
| train_ST_part9.zip | link | link | link | link | | |
| train_ST_part10.zip | link | link | link | link | | |
| train_ST_part11.zip | link | link | link | link | | |
| train_LT_part1.zip | link | link | link | link | | |
| train_LT_part2.zip | link | link | link | link | | |
| train_LT_part3.zip | link | link | link | link | | |
| train_LT_part4.zip | link | link | link | link | | |
| test_ST_part1.zip | link | link | link | link | | |
| test_ST_part2.zip | link | link | link | link | | |
| test_ST_part3.zip | link | link | link | link | | |
| test_ST_part4.zip | link | link | link | link | | |
| test_ST_part5.zip | link | link | link | link | | |
| test_ST_part6.zip | link | link | link | link | | |
| test_ST_part7.zip | link | link | link | link | | |
| test_ST_part8.zip | link | link | link | link | | |
| test_ST_part9.zip | link | link | link | link | | |
| test_ST_part10.zip | link | link | link | link | | |
| test_ST_part11.zip | link | link | link | link | | |
| test_ST_part12.zip | link | link | link | link | | |
| test_ST_part13.zip | link | link | link | link | | |
| test_LT_part1.zip | link | link | link | link | | |
| test_LT_part2.zip | link | link | link | link | | |
| test_LT_part3.zip | link | link | link | link | | |
| test_LT_part4.zip | link | link | link | link | | |
| test_LT_part5.zip | link | link | link | link | | |
| test_LT_part6.zip | link | link | link | link | | |
| test_LT_part7.zip | link | link | link | link | | |
| test_LT_part8.zip | link | link | link | link | | |
| test_LT_part9.zip | link | link | link | link | | |
| test_LT_part10.zip | link | link | link | link | | |
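Because the dataset is split across many archives, it can help to check that every part has been downloaded before extracting. The following is a minimal sketch, not an official tool: it assumes the archives are saved under the file names listed in the table, and the `downloads` directory and both function names are hypothetical.

```python
from pathlib import Path

# Number of parts per split, as listed in the download table.
PARTS = {
    "train_ST": 11,
    "train_LT": 4,
    "test_ST": 13,
    "test_LT": 10,
}


def expected_archives():
    """Return every archive name listed in the download table."""
    return [
        f"{split}_part{i}.zip"
        for split, count in PARTS.items()
        for i in range(1, count + 1)
    ]


def missing_archives(download_dir):
    """Return the listed archives that are not present in download_dir."""
    root = Path(download_dir)
    return [name for name in expected_archives() if not (root / name).is_file()]


if __name__ == "__main__":
    # "downloads" is a hypothetical local directory, not a path from the dataset.
    for name in missing_archives("downloads"):
        print("missing:", name)
```

Running the script prints one line per archive that is still missing from the chosen directory and prints nothing when all parts are present.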

Evaluation & Results

Citation

@InProceedings{Zhang_CVPR22_VTUAV,
  author = {Pengyu Zhang and Jie Zhao and Dong Wang and Huchuan Lu and Xiang Ruan},
  title = {Visible-Thermal UAV Tracking: A Large-Scale Benchmark and New Baseline},
  booktitle = {Proceedings of the IEEE conference on computer vision and pattern recognition},
  year = {2022}
}

Contact

If you have any questions, please contact Pengyu Zhang at pyzhang@mail.dlut.edu.cn.